Porting to Linux OS


The prototype requires a server that:

- acts as the network interface between the synchronous and asynchronous domains

- schedules the sending of packets at the right time (TDP functionality)

The first releases of the TDP system were developed on the FreeBSD kernel version 4.8 and then ported to newer kernel versions, up to version 6.1. Although successive versions improved the quality of service by reducing the packet transmission delay caused by interference from system interrupts, the results obtained from this system are not entirely satisfactory.

The ultimate goal of this part of the work is to run the TDP functionality on a real-time operating system. The optimal solution is a hard real-time OS; for this purpose, the best solution we evaluated is a Linux system (e.g. a Debian or Ubuntu distribution) with the CONFIG_PREEMPT_RT patch developed by Ingo Molnar. Further general information about Real Time Linux can be found here.

The main features of the patch are:

- to turn Linux into a hard real-time OS

- to reduce to a minimum the parts of the kernel that are non-preemptible.

A detailed description of the TDP software and how it works can be found on this website, and in more detail in the publications section; here we provide an overall (high-level) description of it. The latest version of the TDP router runs on FreeBSD with kernel version 6.1 and is based on two main blocks:

- Shaper. It is the input process that:

  - takes the incoming traffic from the asynchronous interfaces

  - puts the packets in the correct queues, so that the TDP block can send them at the right time toward the synchronous domain

- TDP. It is the manager of the output traffic (toward the synchronous domain): it examines the packets enqueued by the Shaper and sends them at the right time, according to the global synchronization provided by the GPS signal.
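The division of labour between the two blocks can be illustrated with a small user-space model. This is only a sketch under stated assumptions, not the TDP implementation: the time-frame length `FRAME_NS`, the class `TimeDrivenQueue`, its method names, and the idea of indexing queues by frame number are hypothetical choices made for illustration. The text above only states that the Shaper queues packets and that the TDP block sends them at the right time according to the GPS-provided global synchronization.

```python
from collections import defaultdict, deque

FRAME_NS = 125_000  # assumed time-frame length in nanoseconds (hypothetical value)


def frame_index(utc_ns: int, frame_ns: int = FRAME_NS) -> int:
    """Return the index of the time frame that contains the UTC timestamp."""
    return utc_ns // frame_ns


def next_frame_start(utc_ns: int, frame_ns: int = FRAME_NS) -> int:
    """Return the start time (ns) of the first frame beginning after utc_ns."""
    return (frame_index(utc_ns, frame_ns) + 1) * frame_ns


class TimeDrivenQueue:
    """Toy model: the Shaper enqueues per frame, the TDP block drains per frame."""

    def __init__(self, frame_ns: int = FRAME_NS):
        self.frame_ns = frame_ns
        self.queues = defaultdict(deque)  # frame index -> packets to send in it

    def shaper_enqueue(self, packet, arrival_utc_ns: int, delay_frames: int = 1):
        """Shaper side: schedule the packet delay_frames frames after arrival."""
        target = frame_index(arrival_utc_ns, self.frame_ns) + delay_frames
        self.queues[target].append(packet)
        return target

    def tdp_dequeue(self, now_utc_ns: int):
        """TDP side: return every packet scheduled for the current frame."""
        current = frame_index(now_utc_ns, self.frame_ns)
        return list(self.queues.pop(current, ()))
```

In this model a packet arriving at 2500 ns with a 1000 ns frame is assigned to frame 3 and is released only once the clock reaches 3000 ns. A real implementation would also have to decide what happens to packets whose frame has already passed, which this sketch does not handle.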

The port to Linux can be done in different ways; one possible solution is to use native Linux functionality for scheduling packets and for creating and managing queues. These goals can be achieved by using:

- the firewall. It manages the incoming traffic: it treats the incoming packets according to predefined rules.

- the Linux QoS system. It can be used to build queues that store the packets before they are sent to the network.
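The combination of rule-based classification and queueing can be modelled in a few lines of user-space code. This is a rough sketch and not how the Linux firewall or QoS subsystems are actually configured: the `Packet` fields, the `Rule` format, the `Classifier` class, and the queue names are all hypothetical and serve only to show ordered rules feeding named queues.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src: str
    dst_port: int
    payload: bytes = b""


@dataclass(frozen=True)
class Rule:
    """Match on optional fields; None means 'any'. Hypothetical rule format."""
    queue: str
    src: Optional[str] = None
    dst_port: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        return (self.src in (None, pkt.src)
                and self.dst_port in (None, pkt.dst_port))


class Classifier:
    """First matching rule wins, as in an ordered firewall rule list."""

    def __init__(self, rules, default_queue="best_effort"):
        self.rules = list(rules)
        self.default_queue = default_queue
        self.queues = {r.queue: deque() for r in self.rules}
        self.queues.setdefault(default_queue, deque())

    def enqueue(self, pkt: Packet) -> str:
        """Store the packet in the queue of the first matching rule."""
        for rule in self.rules:
            if rule.matches(pkt):
                self.queues[rule.queue].append(pkt)
                return rule.queue
        # No rule matched: fall back to the default queue.
        self.queues[self.default_queue].append(pkt)
        return self.default_queue
```

For example, with the rules `Rule("video", dst_port=5004)` and `Rule("control", src="10.0.0.1")`, a packet from 10.0.0.1 to port 5004 lands in the `video` queue because that rule comes first.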

The second goal of this task is the port of the TDP block itself. To obtain a first version in a short time, we could start by implementing only the output section, i.e. the part of the system that handles outgoing traffic. To this end, we assume that all the incoming traffic is synchronous. This is one of the quickest ways to test the efficiency of the system, even though it is not complete. For this purpose, we need to modify the following main TDP files developed for FreeBSD: tdp.c, tdpdata.h, and tdp.h. Furthermore, we need to modify the part of the Linux kernel that is responsible for sending packets through the network interface card.

Another goal of the project is to structure the time-driven system so that it becomes independent of the network interface vendor (and therefore of vendors’ drivers) and of the type of NIC: copper or fiber.

All the previously mentioned mechanisms depend on synchronization in the time domain. Synchronization on Linux follows the same rules as on FreeBSD: the signal is provided by a GPS receiver (Symmetricom or an all-in-one solution).

Real Time Linux OS Patch (CONFIG_PREEMPT_RT)

The CONFIG_PREEMPT_RT patch extends the CONFIG_PREEMPT patch with additional features.

- CONFIG_PREEMPT patch

It renders much of the kernel code preemptible, with the exception of:

- spinlock critical sections,

- RCU read-side critical sections,

- code with interrupts disabled,

- code that accesses per-CPU variables, and

- other code that explicitly disables preemption.

- CONFIG_PREEMPT_RT

It is a patch developed by Ingo Molnar that introduces additional preemption, allowing most spinlock critical sections (the spinlocks are now "mutexes"), RCU read-side critical sections, and interrupt handlers to be preempted. A few critical spinlocks remain non-preemptible. Its main strengths are excellent scheduling latencies and the potential for hard real-time behavior for some services (e.g., user-mode execution) in some configurations.

From a high-level point of view, the CONFIG_PREEMPT_RT patch converts a “normal” Linux OS into a fully preemptible kernel. In detail, to achieve this, the patch modifies the basic Linux kernel as follows:

- The in-kernel locking primitives are now implemented with rtmutexes and become preemptible.

- The original critical sections are now preemptible, but it is still possible to write non-preemptible code.

- The interrupt handlers are converted into preemptible kernel threads.

- The Linux timer API is converted into an infrastructure for high-resolution kernel timers.
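Before running timing-sensitive code it can be useful to check whether the running kernel was actually built with the patch. The following is a heuristic sketch, not an official detection method: it assumes that RT-patched kernels expose the file `/sys/kernel/realtime` containing `1` and carry a `PREEMPT_RT` or `PREEMPT RT` marker in their version string. Both conventions should be verified on the target system, and the function names are hypothetical.

```python
import platform


def looks_like_rt_kernel(version: str, realtime_flag: str = "") -> bool:
    """Heuristic: True if the strings suggest a PREEMPT_RT kernel.

    version       -- kernel version string, e.g. from platform.version()
    realtime_flag -- content of /sys/kernel/realtime, if that file exists
    """
    if realtime_flag.strip() == "1":
        return True
    # Accept both "PREEMPT_RT" and "PREEMPT RT" spellings (assumed markers).
    normalized = version.upper().replace("_", " ")
    return "PREEMPT RT" in normalized


def running_kernel_is_rt() -> bool:
    """Apply the heuristic to the running system."""
    flag = ""
    try:
        with open("/sys/kernel/realtime") as f:
            flag = f.read()
    except OSError:
        pass  # file absent: no RT flag exposed, or not a Linux system
    return looks_like_rt_kernel(platform.version(), flag)
```

A version string such as `#1 SMP PREEMPT_DYNAMIC ...` is deliberately not treated as real-time, since it does not contain the assumed RT marker.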

The final goal of this patch is to reduce the interrupt response time: we need to control, as precisely as possible, the time the system takes to react to and serve an interrupt. In a non-RT system the response time to an interrupt is not fixed and, even when an upper bound on the response time is provided, it is not small enough for applications that require fast reactions (in the time domain).

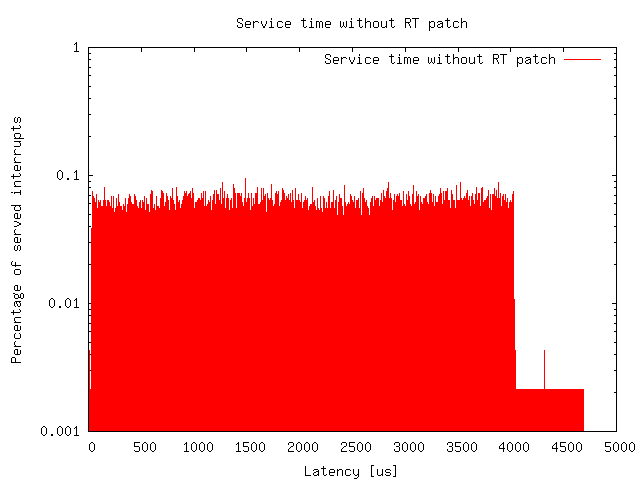

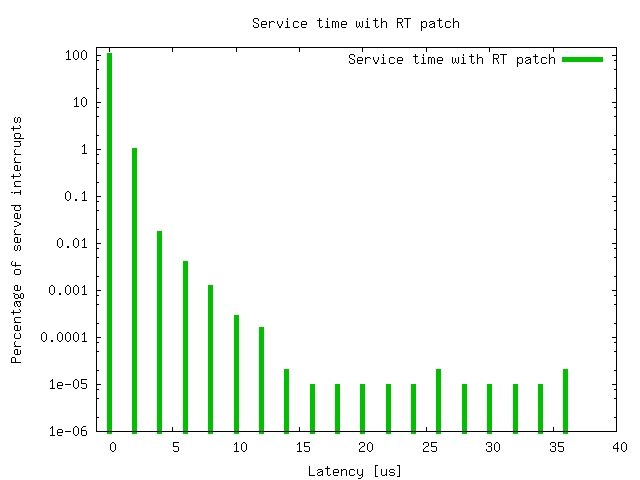

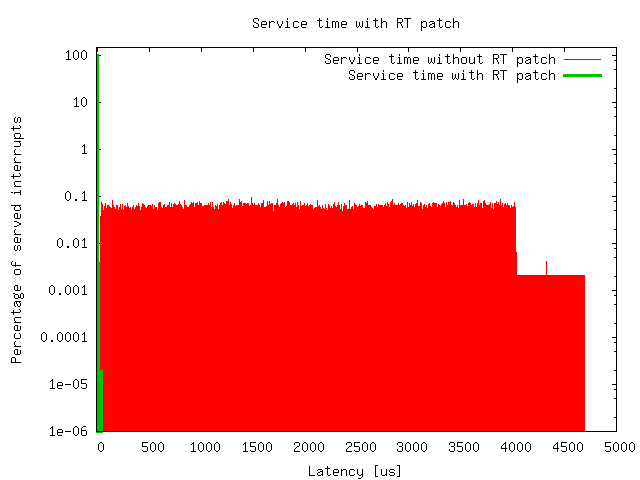

Figures 1 to 3 show the results of a preliminary test comparing the performance of the RT and non-RT systems. The test is performed on two OSes installed on the same machine, using a benchmark tool that acquires timer jitter by measuring the accuracy of sleep and wake operations of highly prioritized threads. Figure 1 shows the results for the non-RT system and Figure 2 those for the RT system. Figure 3 compares the two systems, highlighting their latency upper bounds.

Figure 1: Percentage of served interrupts vs. latency time in the non-RT system.

Figure 2: Percentage of served interrupts vs. latency time in the RT system.

Figure 3: Comparison of percentage of served interrupts vs. latency time in the RT and non-RT systems.
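The kind of measurement behind these figures can be approximated in user space. The sketch below is not the benchmark tool used for the test. It only illustrates the principle described above: a thread sleeps until periodic deadlines, records how late each wake-up is, and the latencies are then turned into a cumulative percentage per latency threshold. All function names, the default priority, and the sampling parameters are assumptions, and the interpreter itself adds overhead that a dedicated tool written in C would not have.

```python
import os
import time


def try_realtime_priority(priority: int = 80) -> bool:
    """Try to switch this process to SCHED_FIFO; return False if not possible."""
    if not hasattr(os, "sched_setscheduler"):
        return False  # scheduling API not available on this platform
    try:
        os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(priority))
        return True
    except OSError:
        return False  # typically missing privileges


def measure_wakeup_latencies(samples: int = 200, interval_ns: int = 1_000_000):
    """Sleep until periodic deadlines and record how late each wake-up is (ns)."""
    latencies = []
    deadline = time.monotonic_ns() + interval_ns
    for _ in range(samples):
        remaining = deadline - time.monotonic_ns()
        if remaining > 0:
            time.sleep(remaining / 1e9)
        latencies.append(max(0, time.monotonic_ns() - deadline))
        deadline += interval_ns
    return latencies


def served_percentage(latencies, thresholds_ns):
    """For each threshold, percentage of wake-ups with latency <= threshold."""
    if not latencies:
        raise ValueError("no latency samples")
    total = len(latencies)
    return [100.0 * sum(1 for x in latencies if x <= t) / total
            for t in thresholds_ns]
```

A typical run would call `try_realtime_priority()` first, then pass the result of `measure_wakeup_latencies()` to `served_percentage()` with a list of thresholds in nanoseconds, once on each kernel, and compare the two curves.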

Pages hosted by "IP-FLOW Group" - DIT - Università di Trento - Italy. © IP-FLOW Project 2004, All Rights Reserved. Last updated: 2008-09-10 05:37:05
